[This is the transcript of a lightning talk given at the RIDE 2024 conference at the University of London on 14 March 2024.]

Hello everyone. My name is Matthew Moran. I am Head of Transformation at The Open University, where I am also undertaking an MSc project in technology innovation management, investigating failed ideas and speculative futures in edtech.

My talk is entitled ‘Emotional AI in education: applications and implications’. So let’s go.

What is emotional AI?

Emotional AI is the development and study of computing systems with ‘the capacity to see, read, listen, feel, classify and learn about emotional life’ (McStay, 2018).

Emotional AI systems learn about human affective or emotional states by applying machine learning and deep learning methods, such as long short-term memory (LSTM) recurrent neural networks.

It’s not a new development: since the 1990s, researchers have been building computational systems to detect, recognise and simulate human emotional states and reactions, in the field known as affective computing.

How does it work?

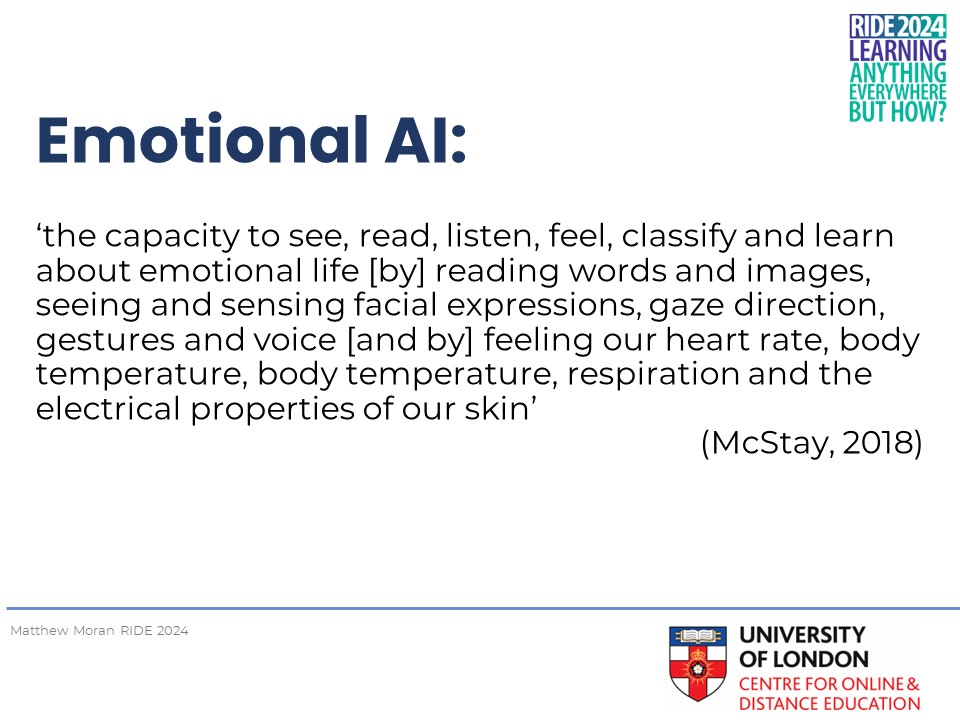

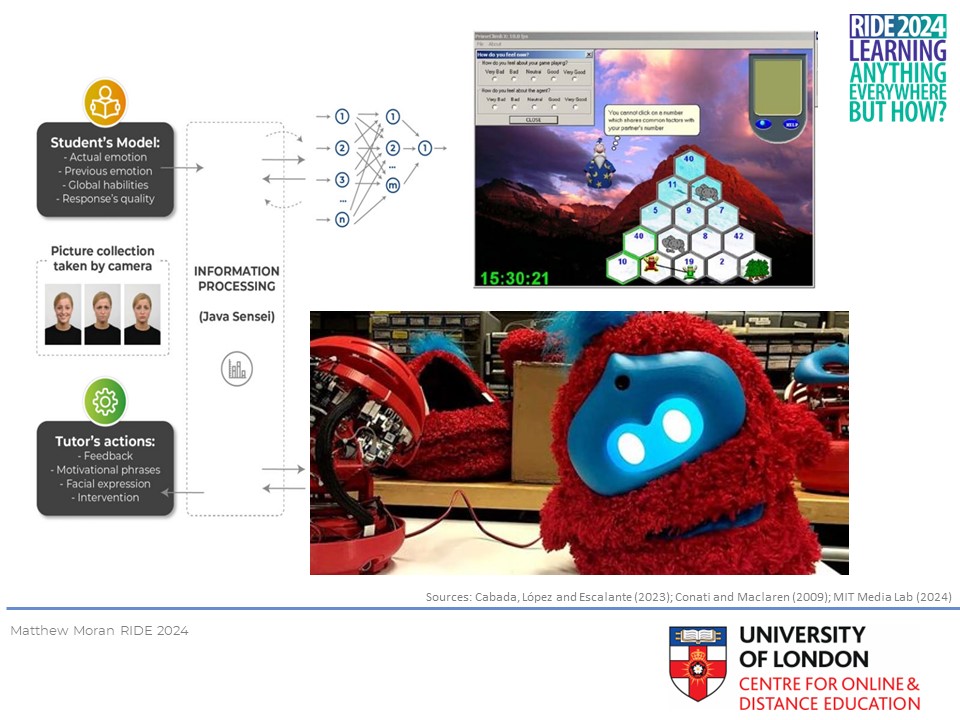

Typically, emotion-aware systems combine hardware and software components designed to:

- Collect emotion data from users (including from face, voice, text and physiological signals)

- Then classify emotions by preparing, processing and fusing this data

- From this they are able to recognise emotions

- They then generate emotion-aware responses

- And finally they express these responses through system outputs.
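To make these five steps concrete, here is a toy sketch in Python. It assumes that each modality (face, voice, text) has already been turned into per-emotion scores by some upstream classifier, and it fuses them with simple weighted late fusion (averaging the per-modality distributions). That is one common fusion strategy among several, not the method of any particular product, and the emotion labels, weights and adaptation rules are all hypothetical, chosen to echo the maths-learning example.

```python
from dataclasses import dataclass

# Hypothetical emotion labels; real systems define their own label sets.
EMOTIONS = ("neutral", "confusion", "frustration", "anxiety")


@dataclass
class ModalityReading:
    """Stand-in for the output of one per-modality classifier (collect + classify)."""
    modality: str             # e.g. "face", "voice", "text"
    scores: dict[str, float]  # unnormalised confidence per emotion
    weight: float = 1.0       # hypothetical trust placed in this modality


def normalise(scores: dict[str, float]) -> dict[str, float]:
    """Scale scores to sum to 1; missing emotions count as 0, all-zero becomes uniform."""
    total = sum(scores.get(e, 0.0) for e in EMOTIONS)
    if total == 0:
        return {e: 1.0 / len(EMOTIONS) for e in EMOTIONS}
    return {e: scores.get(e, 0.0) / total for e in EMOTIONS}


def fuse(readings: list[ModalityReading]) -> dict[str, float]:
    """Weighted late fusion: weighted average of the normalised distributions."""
    total_weight = sum(r.weight for r in readings)
    fused = {e: 0.0 for e in EMOTIONS}
    for r in readings:
        for e, p in normalise(r.scores).items():
            fused[e] += r.weight * p / total_weight
    return fused


def recognise(fused: dict[str, float]) -> str:
    """Recognise: pick the most probable emotion."""
    return max(fused, key=fused.get)


# Hypothetical adaptation rules for a learning app (generate a response).
RESPONSES = {
    "neutral": "Continue with the next exercise.",
    "confusion": "Show a worked example before the next question.",
    "frustration": "Offer an easier question and a hint.",
    "anxiety": "Suggest a short break and remove the timer.",
}


def respond(emotion: str) -> str:
    return RESPONSES[emotion]


if __name__ == "__main__":
    readings = [
        ModalityReading("face", {"confusion": 0.6, "neutral": 0.4}),
        ModalityReading("voice", {"frustration": 0.5, "confusion": 0.3, "neutral": 0.2}),
        ModalityReading("text", {"confusion": 0.7, "anxiety": 0.3}, weight=0.5),
    ]
    emotion = recognise(fuse(readings))
    # Express: here just printed; a real system might change the interface.
    print(emotion, "->", respond(emotion))
```

With these made-up inputs the fused distribution favours confusion, so the sketch prints `confusion -> Show a worked example before the next question.` Late fusion keeps each modality’s classifier independent, which is simple but ignores interactions between signals that end-to-end multimodal models can learn.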

In the current state of the art, it is common to see end-to-end learning systems able to process raw, unlabelled data using unsupervised representation learning (a machine learning technique in which a model learns useful features directly from large volumes of data, without human-labelled examples).

And we are also seeing systems that can be pre-trained to process data from one modality (say, audio signals) using labelled data from another modality (such as images), by using generative adversarial networks (in which a generator network produces synthetic data and a discriminator network tries to tell it apart from real data, each improving by competing against the other).

Why is this significant?

Well, imagine a computer game for learning maths that can detect when learners are experiencing emotions that impede learning, such as anxiety, confusion or frustration, and then adapt the learning experience. That could stop learners disengaging and perhaps going on to develop limiting beliefs about their maths ability, as often happens.

Emotion-aware systems have been developed and integrated into learning systems in education since the early 2000s, in the form of intelligent tutoring systems, serious games, and social robots.

Researchers have attributed certain benefits to these systems, including improvements in learner engagement, motivation, comprehension, information processing and recall, self-concept, self-efficacy and mastery orientation.

Despite the promise of these studies, the field lost momentum in the mid-2010s, particularly with the decline of intelligent tutoring systems.

But activity has resurged in the current decade, and right now there is intense research and development, for example in applications of affective AI in immersive virtual environments, in virtual humans, and in emotion-aware AI games and personal assistants.

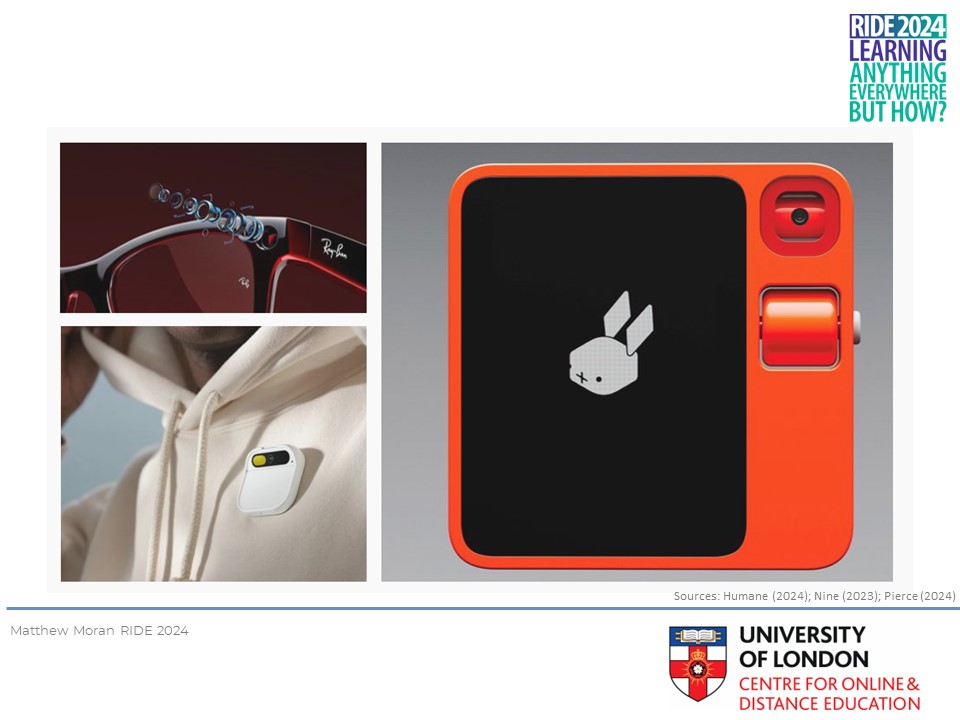

Emotion-sensitive systems are now converging with multimodal machine learning models and with consumer hardware such as smart glasses, extended reality (XR) headsets, and other mobile and wearable AI-enabled devices.

Smart glasses, such as the Ray-Ban Meta glasses, provide wearable AI experiences and personal voice-controlled co-pilots. Imagine a pair of glasses that knows when you’re trying to focus and can help you switch context at the right time, so that you stay in the flow of study.

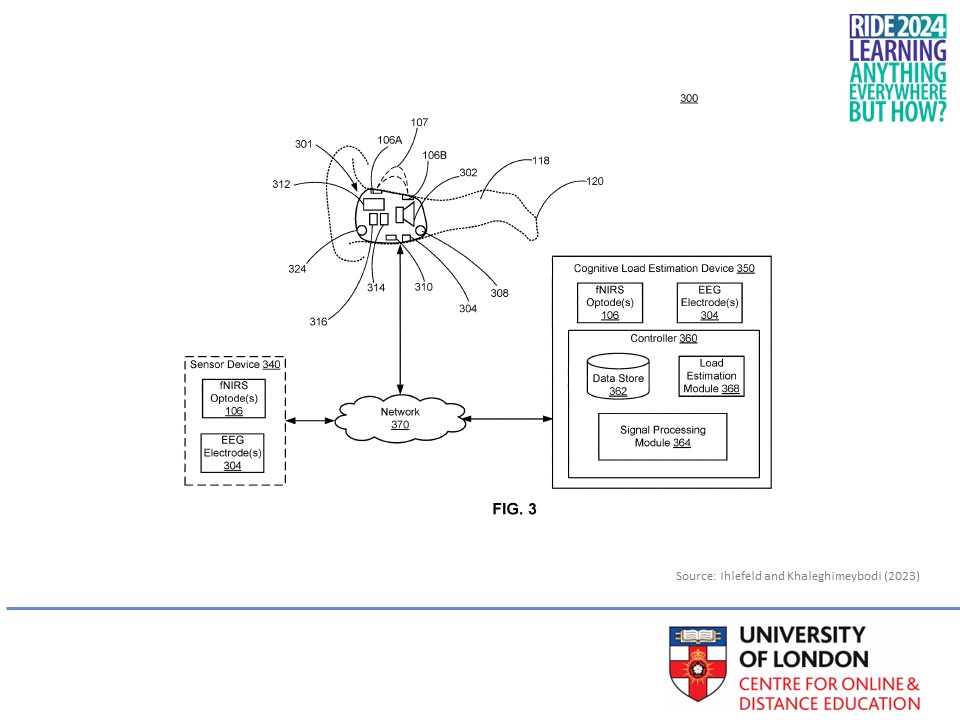

Both Apple and Meta have recently submitted patent filings for in-ear sensors able to detect brain activity, using techniques such as electroencephalography (which measures electrical signals) and functional near-infrared spectroscopy (which measures changes in blood oxygenation). Expect to see these soon in smart glasses, headsets, and earbuds.

Louis Rosenberg (2024) has coined the phrase ‘augmented mentality’ to describe this convergence of emotional and generative AI in consumer products, some of which now cost as little as 180 euros.

Just think of the affordances of this for personalised, context-sensitive, adaptive and multisensory learning experiences. Talk about ‘learning everything anywhere’!

So we need to be thinking about how we will design learning for ‘augmented mentality’.

Are we ready?

Do we trust the vendors?

Or are we happy that the European Union AI Act will ban emotion-recognition systems in educational institutions, except for medical or safety reasons?

Professor Michael Wooldridge (2021) has described the history of AI as one of ‘failed ideas’.

Emotional AI may become yet another failed idea. But we can also see it as a speculative future.

If we imagine that these technologies will become mainstream, what are the possibilities, and what are the implications for how we design learning experiences? What are the next-order effects? And what do we need to do to prepare for all this?

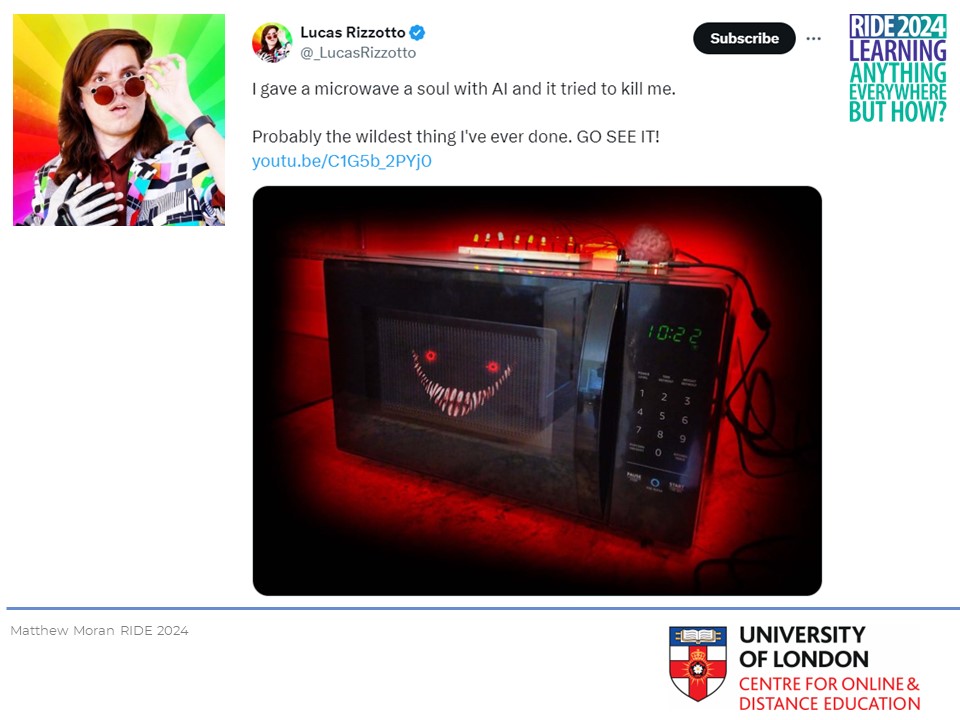

I’d love to talk with you more about speculative design and edtech, but you will find it way more fun to check out Lucas Rizzotto on the socials for his mad-professor experiments with AI. Lucas famously gave his microwave not only AI emotions but an AI soul, only for the microwave to take revenge by trying to cook him alive. He has loads of hilarious takes on AI smart glasses too, over on YouTube.

That’s it, except for the references. Thanks for listening, and thank you for having me.